Google Enhances AI Offerings with Gemini Updates

In a recent series of announcements, Google unveiled substantial updates to its Gemini AI models, promising better performance and reliability for both users and developers. With new competitors like DeepSeek emerging and OpenAI shipping updates of its own, AI development continues at a rapid pace.

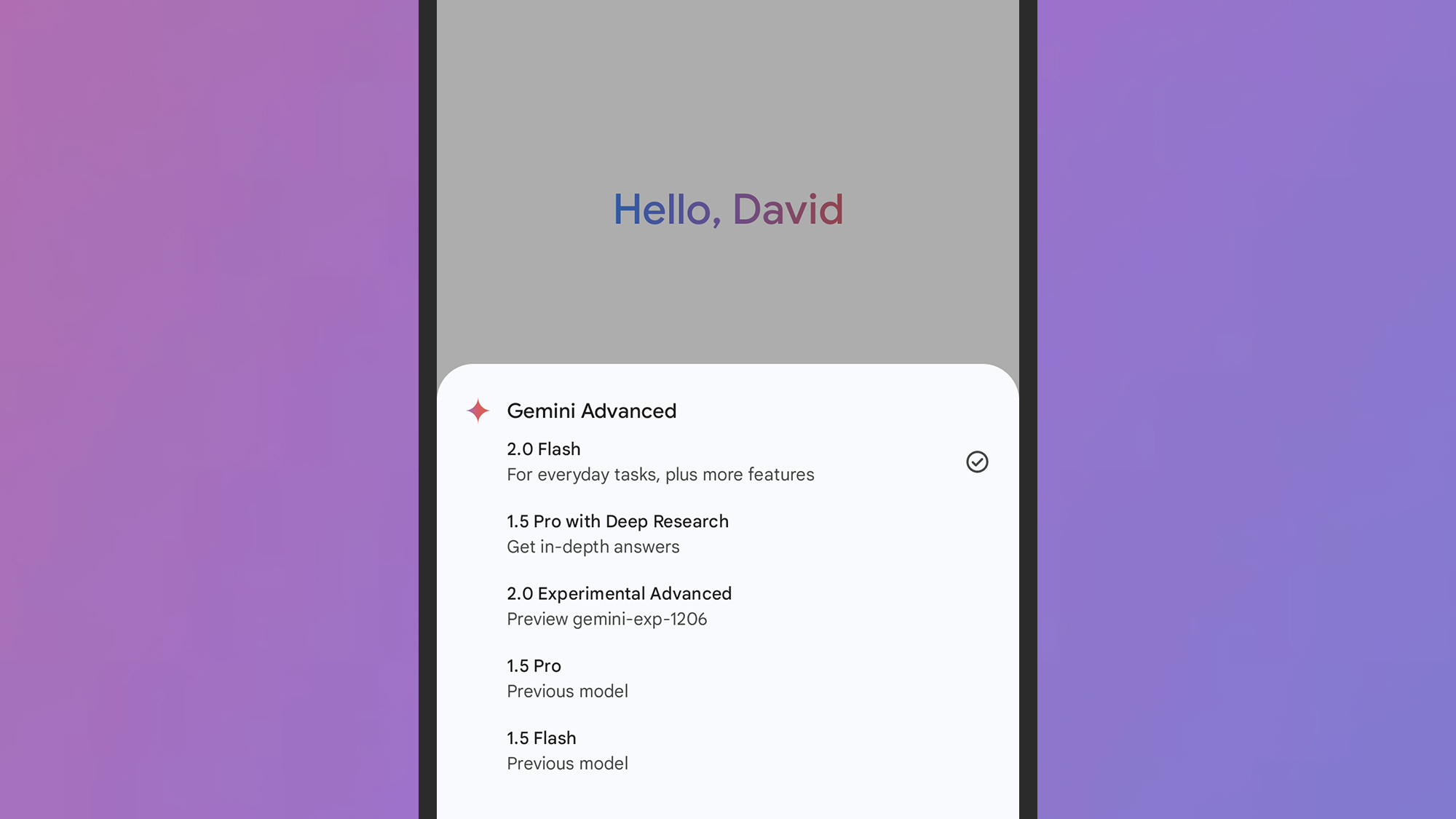

The Gemini 2.0 Flash model, which debuted in December for a limited audience, is now available to all users. It can be found in the Gemini apps on both desktop and mobile, and many users may have already tried it, since the rollout began last week. The Flash models are designed to be faster and more lightweight, with minimal trade-offs in performance.

Additionally, a new experimental version called the Gemini 2.0 Flash Thinking model is being offered for public testing. This innovative “reasoning” model allows the AI to demonstrate its thought processes while generating responses, aiming for enhanced accuracy and transparency, similar to features found in ChatGPT.

A version of this model can also work with Google apps, including Google Search, Google Maps, and YouTube. That lets it draw on real-time web information, relevant data from Google Maps (such as travel times and location details), and content from YouTube videos.

Lastly, developers gain access to the Gemini 2.0 Flash-Lite model. This is the most budget-friendly variant in the Gemini lineup, aimed at developers building tools with Gemini, and it is meant to maintain high performance across diverse inputs such as text and images.

Advanced Pro Models

Credit: DailyHackly

Next in line is the more sophisticated Gemini 2.0 Pro Experimental model. Although slightly slower than its Flash counterparts, it excels in reasoning, writing, programming, and problem-solving capabilities. This model is now available in its experimental stage for developers and those subscribed to Gemini Advanced at a monthly fee of $20.

According to Google, this model has stronger coding capabilities and handles complex prompts well, with a better grasp of world knowledge than previous releases. It can process inputs of up to two million tokens per prompt, equivalent to approximately 1.4 million words, or about double the length of the Bible.
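The token-to-word comparison above can be checked with a little arithmetic. The following is a minimal sketch that uses only the figures quoted in the article; the words-per-token ratio and the Bible word count are values implied by those figures, not numbers published by Google, and the function name `tokens_to_words` is made up for illustration.

```python
# Figures quoted in the article.
PRO_CONTEXT_TOKENS = 2_000_000   # maximum tokens per prompt for 2.0 Pro
QUOTED_WORDS = 1_400_000         # the article's word-count equivalent

# Ratio implied by the two figures above (an inference, not an official value).
WORDS_PER_TOKEN = QUOTED_WORDS / PRO_CONTEXT_TOKENS


def tokens_to_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Return a rough word-count estimate for a given token budget."""
    return round(tokens * words_per_token)


# "Double the length of the Bible" implies a Bible of roughly this many words.
implied_bible_words = QUOTED_WORDS // 2

print(f"Implied words per token: {WORDS_PER_TOKEN}")
print(f"Words in a full Pro prompt: {tokens_to_words(PRO_CONTEXT_TOKENS):,}")
print(f"Implied Bible length: {implied_bible_words:,} words")
```

Real tokenizers vary by language and content, so the ratio here is only a rule of thumb for English prose.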

This capacity is a significant step up from the Gemini 2.0 Flash models, which accept only half that amount (one million tokens). Google has also shared performance metrics: on the MMLU-Pro benchmark, Gemini 2.0 Flash-Lite, 2.0 Flash, and 2.0 Pro score 71.6%, 77.6%, and 79.1% respectively, compared to 67.3% for 1.5 Flash and 75.8% for 1.5 Pro.

Similar gains appear across other AI benchmarks. On a leading mathematics assessment, Gemini 2.0 Pro Experimental scores 91.8%, ahead of 90.9% for 2.0 Flash, 86.8% for 2.0 Flash-Lite, 86.5% for 1.5 Pro, and 77.9% for 1.5 Flash.
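To make the generation-over-generation gains easier to compare, here is a small sketch that computes the percentage-point differences from the scores reported above. The dictionary keys (`mmlu_pro`, `math_benchmark`) and the helper `gain` are illustrative names only; the numbers are the ones quoted in the article.

```python
# Benchmark scores (percent) as reported in the article.
scores = {
    "1.5 Flash":      {"mmlu_pro": 67.3, "math_benchmark": 77.9},
    "1.5 Pro":        {"mmlu_pro": 75.8, "math_benchmark": 86.5},
    "2.0 Flash-Lite": {"mmlu_pro": 71.6, "math_benchmark": 86.8},
    "2.0 Flash":      {"mmlu_pro": 77.6, "math_benchmark": 90.9},
    "2.0 Pro":        {"mmlu_pro": 79.1, "math_benchmark": 91.8},
}


def gain(new: str, old: str, benchmark: str) -> float:
    """Percentage-point improvement of `new` over `old` on one benchmark."""
    return round(scores[new][benchmark] - scores[old][benchmark], 1)


# Compare each 2.0 model with its 1.5 predecessor.
for new, old in [("2.0 Flash", "1.5 Flash"), ("2.0 Pro", "1.5 Pro")]:
    for benchmark in ("mmlu_pro", "math_benchmark"):
        print(f"{new} vs {old} on {benchmark}: +{gain(new, old, benchmark)} points")
```

On these figures, the Flash line improves more between generations than the Pro line does on both benchmarks.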

As is customary with AI model launches, comprehensive details about the training data, hallucination risks, inaccuracies, and energy usage have not been extensively disclosed. Nevertheless, Google asserts that the latest Flash models demonstrate increased efficiency and improved capabilities in processing feedback to minimize safety and security vulnerabilities.