Apple’s AI Missteps and Future Directions

Even the most devoted Apple enthusiasts acknowledge that the company is struggling with artificial intelligence. The highly anticipated Siri upgrade, a centerpiece of Apple Intelligence, has been delayed, which few expected given how heavily Apple promoted it last year. A recent post from Apple outlines how the company intends to regain momentum in AI.

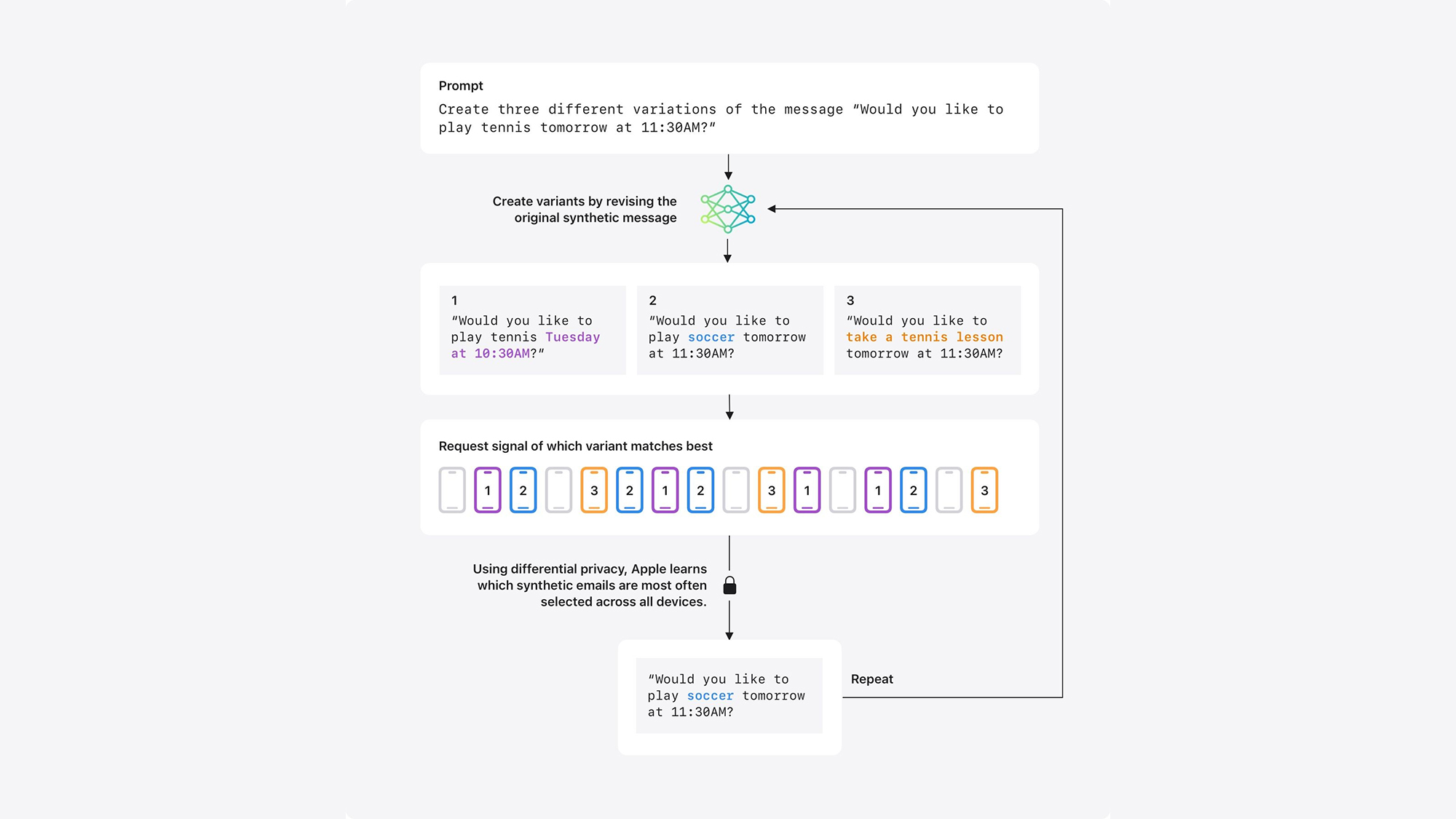

One reason for Apple’s difficulties with generative AI may be its emphasis on user privacy, which goes further than competitors such as OpenAI and Google. Apple does not use user data to train its large language models (LLMs), relying instead on publicly available text. It also makes extensive use of synthetic data: text that AI models generate from prompts and existing content.

The problem with synthetic data is that it is, by definition, artificial. It lacks the depth and diversity of authentic human writing, and without real writing to compare against, it is hard to judge how good AI-generated text actually is. The company has now signaled how it intends to improve its text generation.

In essence, Apple plans to compare AI-generated text against samples of real user writing stored on devices, with multiple safeguards to protect user identities and to keep personal messages from being sent back to Apple. The quality of the synthetic text is judged by how closely it matches real writing samples, and only aggregated results are returned to Apple.

Notably, the evaluation does not analyze text directly. Both the synthetic outputs and the real writing samples are converted into “embeddings,” numerical vectors that capture the meaning of a piece of text. Comparing embeddings makes it possible to assess quality without anyone reading the underlying content.
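The comparison described above can be illustrated with a small sketch. It assumes cosine similarity as the matching measure and uses tiny hand-made three-dimensional vectors in place of real embeddings; the function names (`closest_candidate`, `aggregate`) are hypothetical, and the sketch leaves out the encryption and privacy noise a real deployment would add. Each simulated device reports only the index of the synthetic candidate closest to its local samples, and the server sees only the tally.

```python
import math
from collections import Counter

# Hypothetical toy embeddings; real embedding models produce hundreds of dimensions.
Vector = list[float]

def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def closest_candidate(candidates: list[Vector], local_samples: list[Vector]) -> int:
    """Device side: index of the synthetic candidate that best matches any of
    the user's local writing samples. In this simulation, only the index is reported."""
    def best_score(c: Vector) -> float:
        return max(cosine_similarity(c, s) for s in local_samples)
    return max(range(len(candidates)), key=lambda i: best_score(candidates[i]))

def aggregate(votes: list[int]) -> Counter:
    """Server side: count how often each candidate was chosen across devices."""
    return Counter(votes)

# Three synthetic candidates (embeddings sent to every device).
candidates = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Each inner list is one device's private writing samples, already embedded.
devices = [
    [[0.9, 0.1, 0.0]],
    [[0.8, 0.2, 0.1], [0.1, 0.9, 0.0]],
    [[0.7, 0.3, 0.0]],
]
votes = [closest_candidate(candidates, samples) for samples in devices]
print(aggregate(votes).most_common(1))  # → [(0, 2)]
```

Cosine similarity is a common choice for comparing embeddings because it measures direction and ignores vector length; the article does not say which measure Apple uses.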

All shared data is encrypted in transit, and comparisons run only on devices whose owners have opted in to Device Analytics. The option is found in iOS Settings under Privacy & Security > Analytics & Improvements. Apple does not learn which AI-generated samples an individual device matched; it sees only how the samples perform in aggregate across all participating devices.

Genmoji and Other Innovations

Credit: Apple

This anonymized evaluation method is meant to improve the text that Apple’s AI models generate or rewrite, including the accuracy of the summaries they produce. The highest-ranking outputs may be slightly modified and then evaluated again.

A simpler version of this technique is already used in Genmoji, which lets users create custom emoji such as a surfing octopus or a cowboy wearing headphones. The system aggregates prompts from many devices to identify popular ones, while individual requests stay anonymous and are not linked to specific users.

Again, only users who have enabled Device Analytics on their iPhone, iPad, or Mac take part. Because each device sends a deliberately “noisy” signal containing no identifiable user information, Apple can improve its AI models from aggregate trends while keeping individual Genmoji prompts private.
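The article describes the device reports only as “noisy.” One classic way to produce such a signal is randomized response, a local differential privacy technique; the sketch below uses it as an illustrative stand-in and does not claim to be Apple’s actual mechanism. Each device answers “did I use this prompt?” truthfully only with a probability set by a privacy parameter `epsilon`, so no single answer can be trusted, yet the server can still estimate how popular the prompt is across many devices. The function names and all numbers are hypothetical.

```python
import math
import random

def truth_probability(epsilon: float) -> float:
    """Probability of answering truthfully; epsilon > 0 controls privacy (smaller = noisier)."""
    return math.exp(epsilon) / (math.exp(epsilon) + 1)

def randomized_response(used_prompt: bool, epsilon: float, rng: random.Random) -> bool:
    """Device side: report the truth with probability p, otherwise the opposite.
    Any individual report is plausibly deniable."""
    p = truth_probability(epsilon)
    return used_prompt if rng.random() < p else not used_prompt

def estimate_true_count(noisy_reports: list[bool], epsilon: float) -> float:
    """Server side: unbiased estimate of how many devices really used the prompt.
    E[yes] = p*t + (1-p)*(n-t), so t = (yes - (1-p)*n) / (2p - 1)."""
    n = len(noisy_reports)
    p = truth_probability(epsilon)
    yes = sum(noisy_reports)
    return (yes - (1 - p) * n) / (2 * p - 1)

# Simulation: 100,000 devices, 30,000 of which actually used the prompt.
rng = random.Random(0)
n_devices, true_users, eps = 100_000, 30_000, 1.0
truth = [i < true_users for i in range(n_devices)]
reports = [randomized_response(t, eps, rng) for t in truth]
estimate = estimate_true_count(reports, eps)
print(round(estimate))  # close to 30000, but not exact because of the noise
```

The trade-off is visible in the formula: a smaller `epsilon` pushes `p` toward 0.5, which strengthens deniability for each device but makes the aggregate estimate noisier, so more devices are needed for the same accuracy.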

Apple expects to apply similar methods to other Apple Intelligence features, including Image Playground, Image Wand, Memories Creation, Writing Tools, and Visual Intelligence, some of which count among Apple’s first successful AI features.

According to Bloomberg, the upgraded systems will be tested in upcoming betas of iOS 18.5, iPadOS 18.5, and macOS 15.5. More details on these systems and on Apple Intelligence may emerge at Apple’s annual Worldwide Developers Conference, which begins on June 9.

Meanwhile, Apple’s competitors continue to move quickly. Microsoft, Google, and OpenAI keep releasing updates and, apparently less constrained by privacy concerns, use user-generated text to train their AI models.