Apple Unveils Live Translation Feature at WWDC

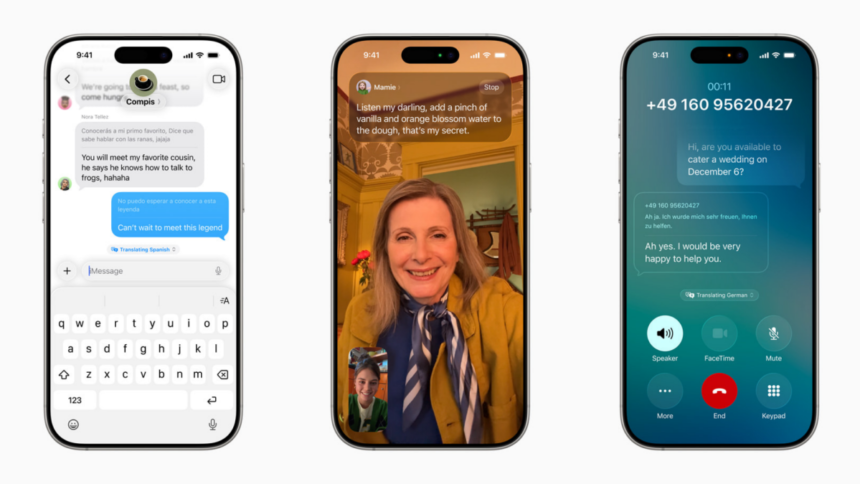

During today’s WWDC presentation, Apple introduced a new feature for people who regularly run into language barriers. Live Translation provides real-time translation between multiple languages and is built into Messages, FaceTime, and even standard phone calls in the Phone app.

The feature resembles translation tools already available on Google Pixel devices, a sign that breaking down language barriers shouldn’t be limited to a single platform. With Live Translation, messages you type are automatically translated into the recipient’s language as you write, and their replies are translated back into your language. Translating messages was possible before, but it required an extra step.

In FaceTime calls, translations appear as live captions on your screen alongside the spoken conversation. In regular phone calls, translations are spoken aloud. Balancing the other person’s voice with the translated speech could be tricky, so it remains to be seen how Apple will handle audio levels. Fortunately, the translated text is also shown on screen, so you can follow along on speakerphone without holding the phone to your ear.


