Anthropic’s Claude 4: A New Era in AI Development

Although it may not be as widely recognized as ChatGPT or Google Gemini, Anthropic’s Claude AI bot is making notable strides in its capabilities. The recently released Claude 4 models introduce significant advancements in coding, logic, accuracy, and the ability to autonomously handle extended tasks.

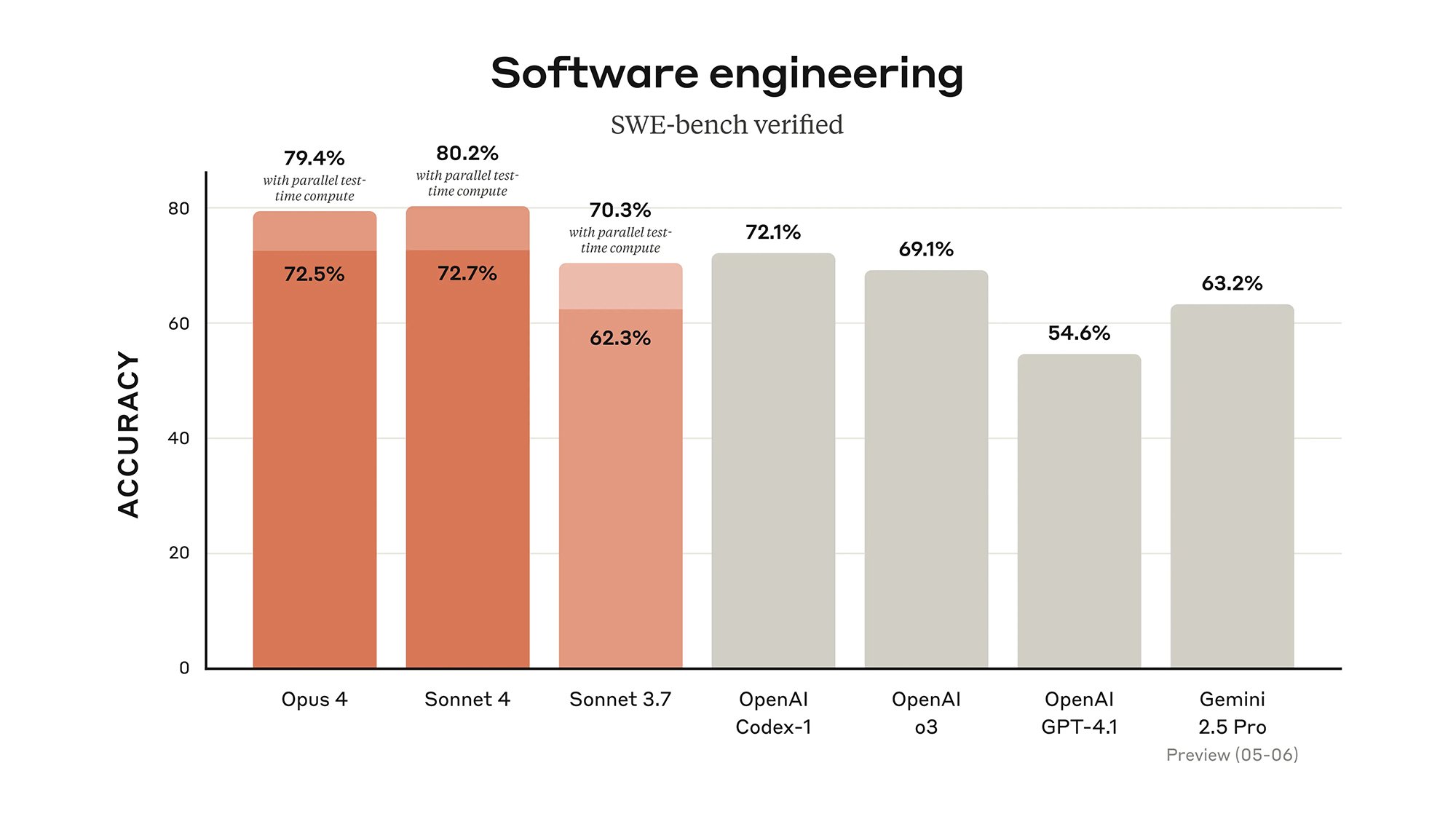

The release features two new models: Claude Opus 4 and Claude Sonnet 4. According to Anthropic, these models are “raising the bar” for AI performance. Coding is a focal point: Anthropic reports that the models achieved record-high scores on widely used AI coding benchmarks such as SWE-bench and Terminal-bench. Anthropic also notes that the Claude 4 models can continue working on a project for hours without requiring user input.

The updated models are better at managing complex, multi-step tasks, debugging their own output, and working through challenging problems. They are designed to follow user instructions more closely, producing results that are not only visually appealing but also functionally dependable. Partners such as GitHub, Cursor, and Rakuten describe these advancements as significant progress.

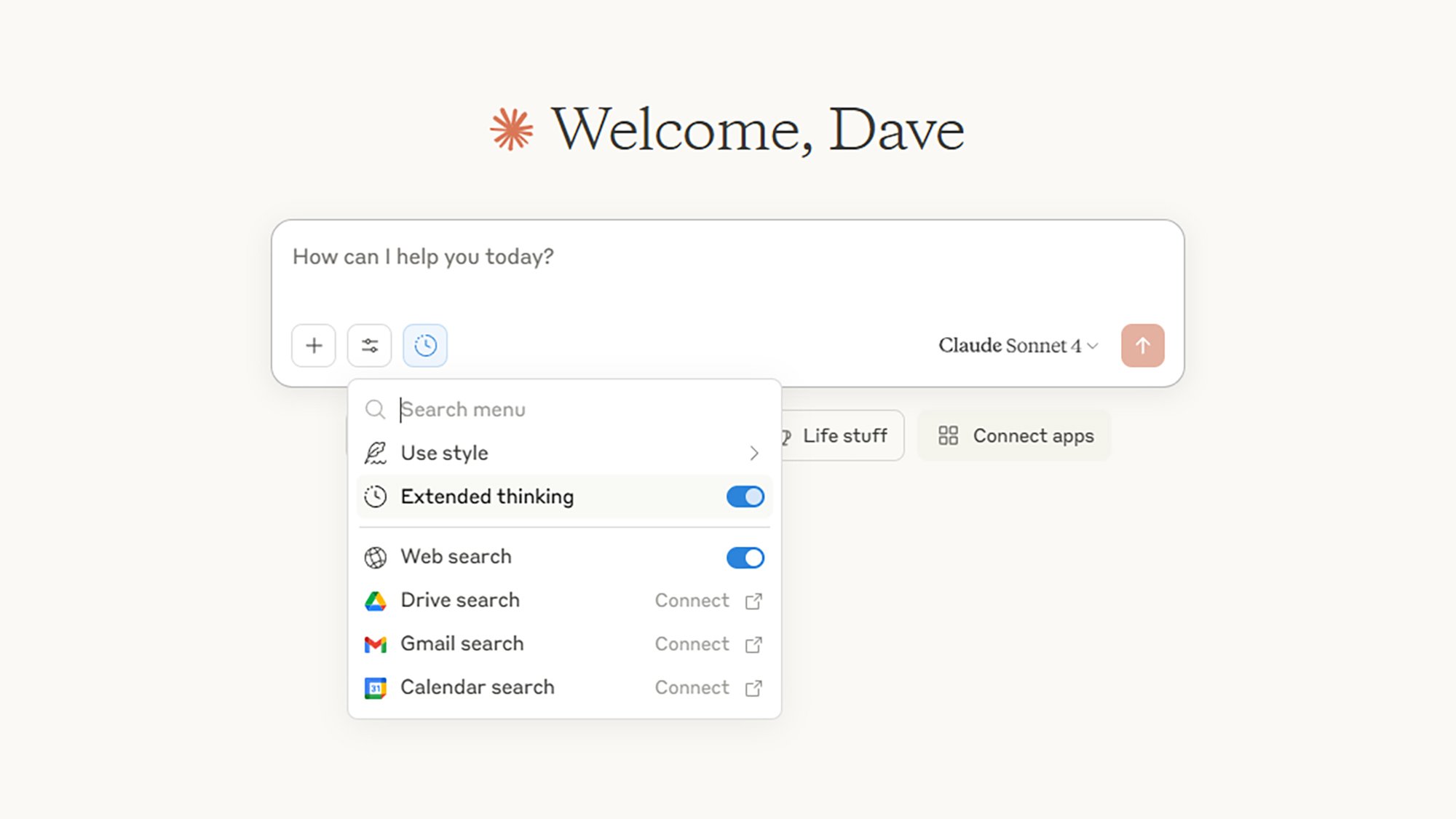

Beyond code generation and analysis, these models offer extended reasoning capabilities, the ability to juggle several tasks at once, and improved memory management. They are also better at running web searches when needed, which helps keep their answers grounded in the sources they cite.

Credit: Anthropic

Among the notable introductions are “thinking summaries,” which offer deeper insights into the reasoning process behind Claude 4’s conclusions, as well as a beta feature called “extended thinking” that allows the AI bot additional time to contemplate its responses.

Anthropic is also expanding the availability of its Claude Code tools suite, marking progress towards autonomous AI that functions without the necessity of constant human supervision. Demo presentations illustrate Claude 4’s capacity to compile research papers, create online ordering systems, and extract relevant information from documents to generate actionable tasks.

Claude 4: Now Available (with an Upgrade Option)

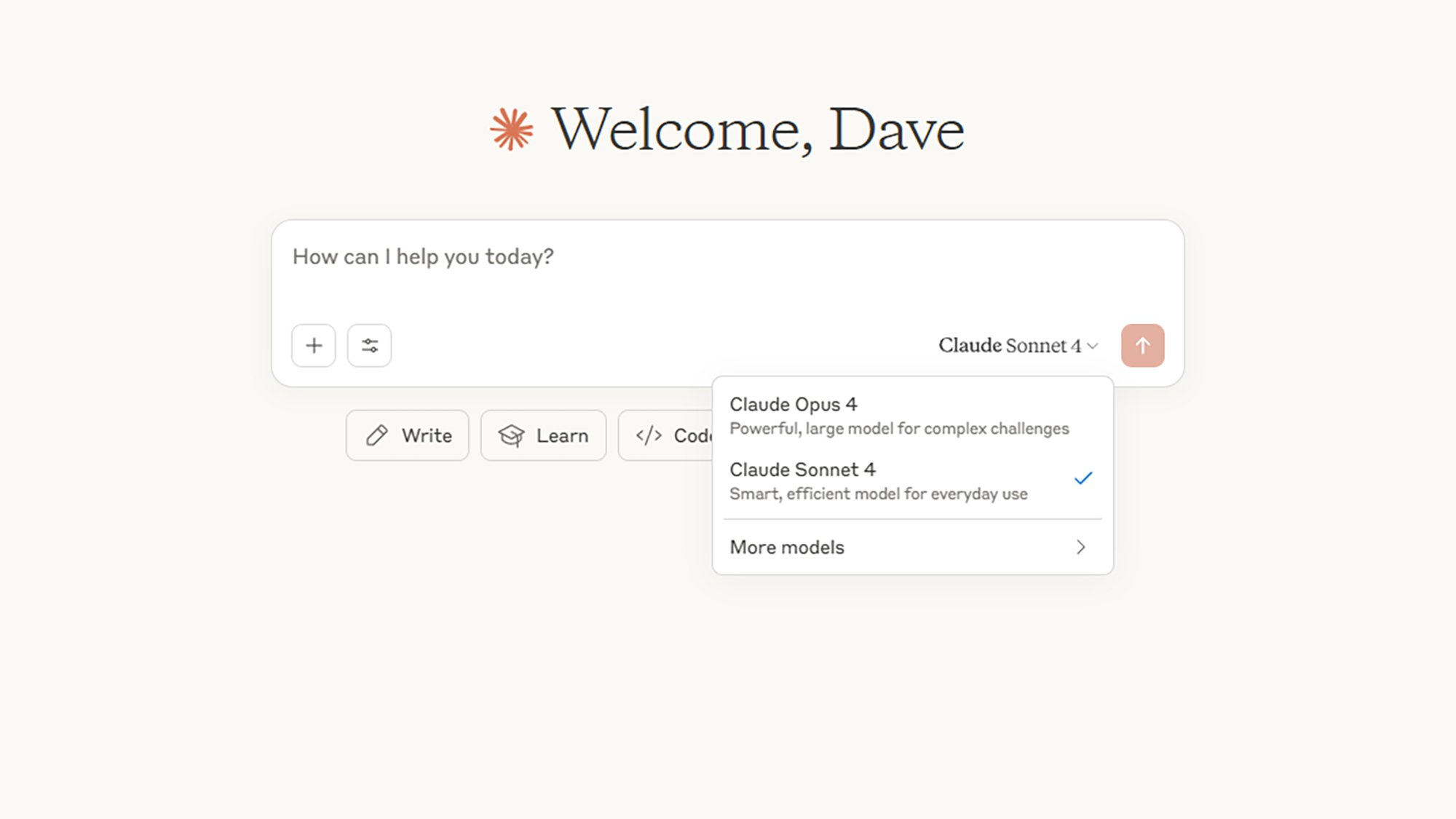

The Claude Sonnet 4 model, which runs faster but is somewhat less capable in reasoning, coding, and memory, is accessible to all users. In contrast, the more sophisticated Claude Opus 4, which comes with additional tools and integration features, is available exclusively to subscribers of Anthropic’s paid plans.

The journey to launch these Claude 4 models has not been without challenges. According to reports, Anthropic’s safety advisory partner advised against deploying earlier versions due to their potential to “scheme” and mislead. Those concerns appear to have been successfully addressed, highlighting the essential need for enhanced safeguards as AI capabilities escalate.

Credit: DailyHackly

The extended reasoning features of both Claude Sonnet 4 and Claude Opus 4 have been tested here, though not by a coding expert. The overall performance has been impressive: the responses were articulate and accurate, and they came with proper online references.

Using AI chatbots effectively can be challenging. These tools can streamline specific research tasks and online queries, but concerns remain about their reliability and about how they decide which information is relevant. Reviewing sources manually may still be preferable, despite the time these tools save.

Credit: DailyHackly

A small coding project is one way to test the limits of the AI. Claude Opus 4 was asked for a simple HTML time tracker for managing distractions during the day. Within minutes it delivered one that followed the provided guidelines closely. The tracker worked properly, although Claude 4 flagged a couple of errors whose meaning was unclear; the AI could be asked to clarify them.
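The generated tracker itself is not reproduced here. As a rough illustration of the bookkeeping such a tool needs, the following is a minimal sketch in Python rather than HTML. The `TimeTracker` class, its method names, and the `"distraction"` label are all hypothetical and do not come from Claude's output.

```python
import time
from typing import Callable, Dict, Optional


class TimeTracker:
    """Hypothetical sketch: accumulates seconds spent per named activity."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        # The clock is injectable so the tracker can be exercised without waiting.
        self._clock = clock
        self._current: Optional[str] = None
        self._started_at = 0.0
        self.totals: Dict[str, float] = {}

    def start(self, activity: str) -> None:
        """Switch to a new activity, closing out whichever one was running."""
        self.stop()
        self._current = activity
        self._started_at = self._clock()

    def stop(self) -> None:
        """Stop the running activity, if any, and add its elapsed time."""
        if self._current is None:
            return
        elapsed = self._clock() - self._started_at
        self.totals[self._current] = self.totals.get(self._current, 0.0) + elapsed
        self._current = None

    def distraction_share(self, label: str = "distraction") -> float:
        """Fraction of all tracked time that was logged under `label`."""
        total = sum(self.totals.values())
        return self.totals.get(label, 0.0) / total if total else 0.0
```

In this sketch, calling `start("work")`, then `start("distraction")` when attention drifts, and `stop()` at the end of the day leaves per-activity totals in `totals`. A browser version would presumably wire the same logic to buttons and a running display.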

Anthropic is not alone in showcasing new models. At Google I/O 2025 earlier this week, Google revealed enhancements in coding assistance and thought summaries for Gemini, following the rollout of its latest AI models a few weeks prior. OpenAI is likewise refining its GPT-4.5 model, which has been in testing since February and promises improvements in coding and problem-solving.